When Should Students Start Using AI?

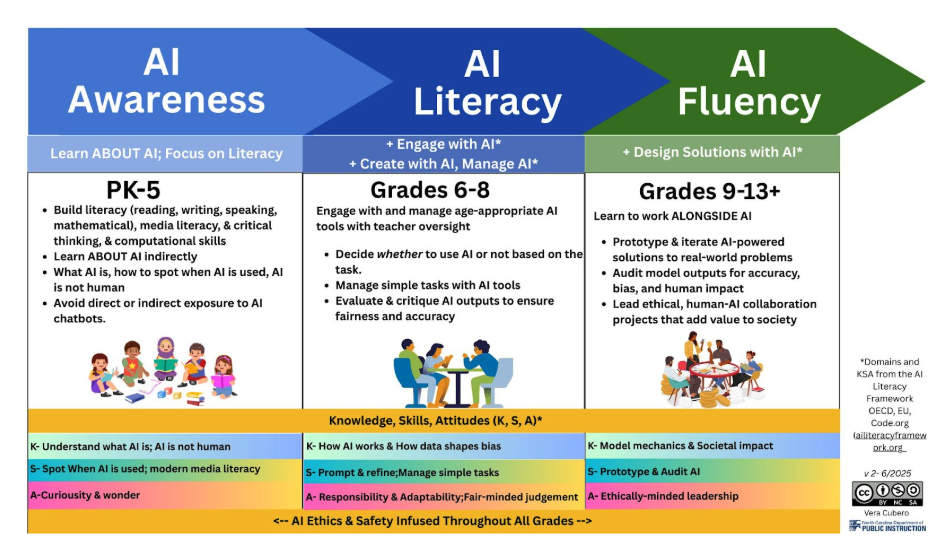

As a person passionate about education, I find frameworks like this one genuinely fascinating. This particular framework was developed in the US and transfers easily to our Australian context. There's much to admire here:

It's remarkably clear and accessible—Vera Cubero and team (OECD, etc.) have done excellent work

It divides "AI familiarity" into three distinct levels, and those in the field will recognise the importance of that differentiation

It separates knowledge, skills, and attitudes as distinct strands, focusing on specific elements within each

The scaffolding is beautifully done—you can see the continuum with ease

What the framework doesn't explicitly state, however, is when children actually begin using AI tools. I find this crucial, and I would honestly add it. You can infer timing from the images accompanying each suggested phase, but I believe it needs to be more explicit. Why? Because I firmly believe children shouldn't use AI tools until they're mature enough for that responsibility. In Australia, this wouldn't happen until past Year 10.

One of the reasons for this position is my ongoing concern about the loss of skills and diminished cognitive effort I'm witnessing in students. Research continues to reveal shocking findings about what happens to us cognitively when we engage with generative AI and outsource work we're meant to do ourselves.

During last night's dinner conversation, my husband raised something I'd never connected before: there's a correlation between addiction and dopamine release without effort. This comes from a podcast he listens to regularly (and I, not quite as often): Huberman Lab, in an episode called "Grow from Doing Hard Things". What does this mean? It suggests we can absolutely become addicted to generative AI tools (what's nicely called "reliance"), because they offer us a pleasurable moment of achievement in which dopamine is released whilst the effort is essentially absent. We keep returning to these tools because that good moment happens again and again: each time we interact, we experience that feeling of accomplishment. Now, imagine a teenager experiencing this. The reliance will develop before we even realise it's there.

Think about how many times you've reached for an answer you could have found yourself, but it would have required a bit more than a couple of clicks. We must teach children to search for answers independently, to understand what's worth learning and to develop their brains and, more importantly, how to actually do that. This can and should come through AI literacy.

But here's where the framework could be strengthened: perhaps the answer isn't simply delayed exposure, but rather structured exposure with enforced constraints. What if Years 8-9 students engaged with AI in analysis mode (examining outputs, identifying flaws, comparing approaches) rather than in generation mode? This might build critical literacy without creating the dopamine-effort disconnect I'm concerned about.

A possible addition to the framework might look like this:

Phase 1 (Years 5-7): No direct tool use. Focus on conceptual understanding of AI systems, pattern recognition, and data literacy.

Phase 2 (Years 8-9): Supervised analytical engagement. Students examine AI outputs generated by teachers, critique them, and compare them to human work. No independent generation.

Phase 3 (Year 10+): Scaffolded generative use with explicit metacognitive requirements. Students must document their thinking process before using AI, compare their approach with AI output, and reflect on effort-reward relationships.

Of course, there's the uncomfortable reality that many students are already using these tools outside school. If a 13-year-old is using ChatGPT for homework regardless of school policy, does delaying school-based AI literacy actually protect them? Or does it simply mean they're developing habits without guidance? This is where I struggle. There's a harm reduction argument here that genuinely unsettles me.

The framework is strong, but these questions around timing and cognitive development need explicit attention. We can't afford to assume that understanding when to introduce tool use is obvious; it's perhaps the most critical decision we'll make in AI literacy education.

What do you think? Does this framework, even with these additions, adequately address the neuroscience of adolescent development and technology dependence?